Introducing Multimodal AI: the key trend in machine learning KellyOnTech AI series

This is a painting generated by artificial intelligence. Do you like it? Let’s talk about multimodal AI today.

What is Multimodal AI?

Multimodal AI is a new AI paradigm that combines various data types (image, text, speech, numerical data) with multiple intelligent processing algorithms to achieve higher performance. Multimodal AI is one of the major trends in machine learning.

What is the use of multimodal AI?

Humans have a lot of common-sense knowledge about the world. For example, if we talk about dogs, you may have thought about how cute your dog looks, how fluffy it feels, barking, and so on. This commonsense knowledge is usually acquired through a combination of visual, verbal, and sensory cues.

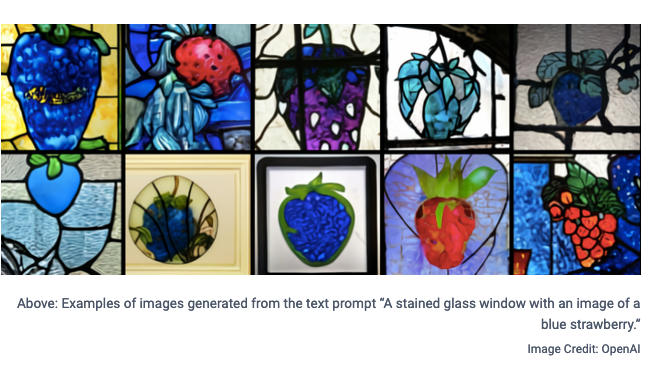

Text to image generation

One of the uses of multimodal AI is to generate images from text. One of the most famous models for this is OpenAI’s DALL-E. For example, a church wants to decorate the colored windows with a blue strawberry pattern. How would you design it? Let’s take a look at the design patterns of artificial intelligence. How about them?

Smart Voice Systems or Smart Assistants

Another application of multimodal AI is a smart voice system or a smart assistant. For example, if I want to buy a light blue silk shirt, the smart assistant needs to be able to distinguish the colour and the material of the shirt, and then provide recommendations accordingly.

Automatically generate video captions or comments

Microsoft Research Asia and Harbin Institute of Technology have jointly created a system that learns to capture audio or video captions and comments, and is then able to automatically provide captions or comments related to scenes in the video.

Predicting video conversations

Google’s research on multimodal AI lies in addressing the AI’s prediction of the next line of dialogue in a video. What is the use of this? For example, if you try to cook a dish but forget the next step. This is where the smart assistant comes in. It can tell you what the next step is right away.

Japanese anime translation

Multimodal AI has also been used in Japanese anime translation. There are a lot of words in bubbles in Japanese anime that are hard to translate. The University of Tokyo in Japan and machine translation startup Mantra have designed a prototype system that can translate text in bubbles.

Which multimodal AI company is worth watching?

Today I introduce a company — Hangzhou Lianhui Technology, established in 2003.

OmModel multimodal pre-trained large models

Lianhui Technology has a multi-modal pre-training large model called OmModel, based on large-scale self-supervised learning multimodal AI algorithms, which has completed industry-based large-scale pre-training of billion-level pictures, 10,000-level videos and billion-level graphics. It achieves a more accurate AI model with a smaller number of labelled samples, integrates more modal information, and obtains a more accurate AI model with internationally advanced performance.

Use case — Media Big Data Analysis

Lianhui Technology’s system adopts natural language understanding and vector database technology, so that the machine can not only read articles, but also understand audio and video, and interpret news information in all aspects, thus helping media workers to quickly discover news clues, discover the hot issues that users really care about, and use data to objectively analyze different views of media, experts, and users. The system currently aggregates data from 100,000+ websites in the whole network, and tracks more than 600,000+ pieces of news information every day.

The system won the Science and Technology Innovation Award from the State Administration of Radio, Film and Television of China.

Use case — Smart Energy

Lianhui Technology’s system can also be used in energy enterprises. In response to the monitoring needs of coal mines, electric power, oil fields and other energy enterprises for important facilities, instrument data, personnel safety, etc. The visual pre-training model is used to dynamically analyze the production environment of energy enterprises and establish the multi-dimensional cognitive knowledge base of people, equipment and environment that realizes the comprehensive analysis and application of safety behaviours, operation specifications, and production energy efficiency, to achieve unsupervised monitoring.