Virtual Pioneer: OpenAI’s Sora A Must-Know Insight for Business Leaders KellyOnTech

This issue covers what business leaders should understand about Sora, OpenAI’s text-to-video model. We will look at two points: first, Sora’s technical advantages, and second, why Sora is a key enabler of the metaverse, or virtual world.

What Are the Technical Advantages of Sora

What exactly are the advantages of the text-to-video model Sora? Let’s take a look at a few videos generated by different models from the same prompt, shown below.

“A half duck half dragon flies through a beautiful sunset with a hamster dressed in adventure gear on its back.”

Sora’s technical route leads the field because it stands on two “strong legs”: the Diffusion model and the Transformer model used by GPT.

Diffusion Model

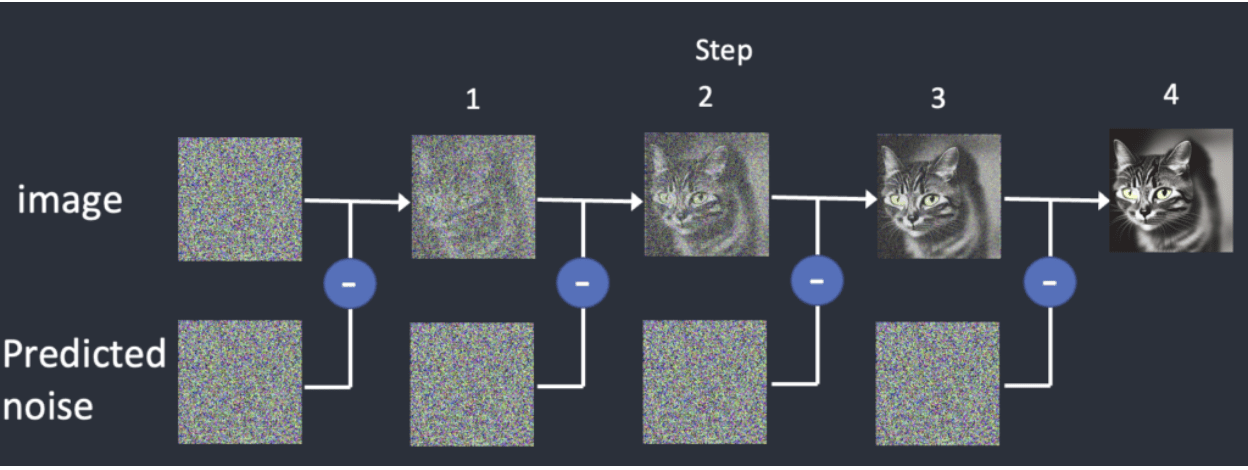

Simply put, the process of generating images using the Diffusion model is a process from blur to clarity. I will explain it using the video I made in July 2022.

The diffusion algorithm starts from an image of pure random noise and then removes that noise step by step, so that shapes and details gradually emerge. After enough denoising steps, the image is close to the one we want to see. During training the process runs in the opposite direction: noise is gradually added to real images, and the model learns to undo it. The benefit of the Diffusion model is that it is easy to train with a simple and efficient loss function and can generate highly realistic images, outperforming GANs (Generative Adversarial Networks) in this regard.
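To make the noising and denoising idea concrete, here is a minimal sketch in plain Python. It is not Sora’s implementation: the linear noise schedule, the tiny four-value “image”, and all function names (`alpha_bar`, `add_noise`, `estimate_clean`, `training_loss`) are assumptions chosen for illustration. In a real system a trained neural network would predict the noise; here the true noise is passed in to show that the step can be inverted.

```python
import math
import random

def alpha_bar(t, num_steps, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal left after t steps of a linear noise schedule."""
    prod = 1.0
    for s in range(1, t + 1):
        beta = beta_start + (beta_end - beta_start) * (s - 1) / (num_steps - 1)
        prod *= 1.0 - beta
    return prod

def add_noise(x0, t, num_steps, rng):
    """Forward process (used in training): blend the clean signal with Gaussian noise."""
    a = alpha_bar(t, num_steps)
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps

def estimate_clean(xt, eps_pred, t, num_steps):
    """Denoising: recover an estimate of the clean signal from a noise prediction."""
    a = alpha_bar(t, num_steps)
    return [(x - math.sqrt(1.0 - a) * e) / math.sqrt(a) for x, e in zip(xt, eps_pred)]

def training_loss(eps_true, eps_pred):
    """The simple loss: mean squared error between true and predicted noise."""
    return sum((a - b) ** 2 for a, b in zip(eps_true, eps_pred)) / len(eps_true)

# Toy usage: a four-pixel "image", noised halfway through a 1000-step schedule.
rng = random.Random(0)
clean = [0.0, 0.5, 1.0, 0.5]
noisy, noise = add_noise(clean, 500, 1000, rng)
# A perfect noise prediction gives back the clean image (up to rounding).
recovered = estimate_clean(noisy, noise, 500, 1000)
```

The loss here is just a mean squared error on the noise, which is one reason diffusion models are considered straightforward to train.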

Transformer Model

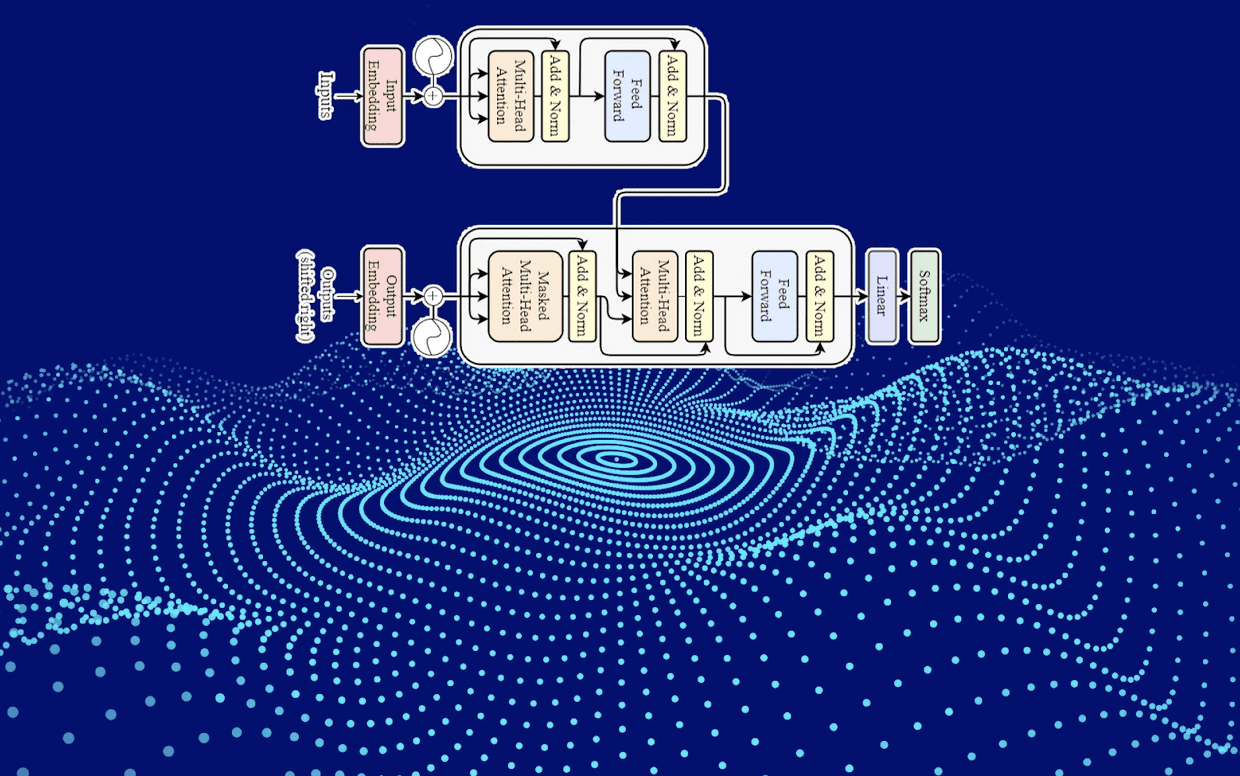

Another model used by Sora is the Transformer, the architecture behind GPT.

The transformer model is a neural network that learns context and meaning by tracking the relationships in sequential data, such as the words in this sentence. In its original form, the Transformer model consists of an encoder and a decoder. Take the sentence “The cat sat on the mat.” as an example. Each word in the sentence is represented as a vector, and these vectors are called embeddings. The encoder takes the input sentence and processes its words together, using an attention mechanism to focus on the relevant parts of the sentence, and then updates each embedding based on the context of the sentence.
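The attention step can be sketched in a few lines of plain Python. This is a simplified illustration, not the code of any particular model: the two-dimensional embeddings for “The cat sat on the mat” are made-up numbers, and the learned projection matrices that real Transformers apply before attention are left out as a simplifying assumption.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: each output is a weighted mix of the values."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Score how relevant each key is to this query.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors according to those weights.
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs

# Hypothetical 2-D embeddings, one per word (illustrative values only).
words = ["The", "cat", "sat", "on", "the", "mat"]
embeddings = [[0.1, 0.0], [0.9, 0.3], [0.2, 0.8], [0.0, 0.1], [0.1, 0.0], [0.8, 0.4]]

# Self-attention: every word looks at every word in the same sentence,
# so each updated embedding now carries context from the whole sentence.
contextual = attention(embeddings, embeddings, embeddings)
```

Each row of `contextual` is the context-aware version of the corresponding word’s embedding.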

Once the input encoding is complete, the decoder takes over. It uses the encoded information in the input sentence to generate output word by word. At every step, the decoder uses an attention mechanism to focus on the relevant parts of the input sentence and generates the next word in the output sequence.
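The word-by-word generation loop can be sketched as follows. The lookup table below is a hypothetical stand-in for a trained decoder, and it ignores the encoded input entirely; a real decoder would attend to that input at every step. The names `toy_next_word` and `generate` and the French example are assumptions for illustration.

```python
END = "<end>"

# Hypothetical stand-in for a trained decoder: given the last word generated,
# it returns the next one. A real model would compute this with attention.
TOY_TABLE = {"<start>": "le", "le": "chat", "chat": "dort", "dort": END}

def toy_next_word(encoded_input, generated):
    """Pick the next word from the toy table (encoded_input is unused here)."""
    return TOY_TABLE.get(generated[-1], END)

def generate(encoded_input, next_word_fn, max_len=10):
    """Produce output one word at a time until an end marker or the length limit."""
    generated = ["<start>"]
    for _ in range(max_len):
        word = next_word_fn(encoded_input, generated)
        if word == END:
            break
        generated.append(word)
    return generated[1:]  # drop the start marker

# Toy usage: "translate" an input sentence; the encoded input is a placeholder.
translation = generate(None, toy_next_word)
```

Because each new word depends on the words already produced, the output can be shorter or longer than the input.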

To summarize, the Transformer model uses an attention mechanism to process the input sequence and generate the output sequence. It excels at tasks such as language translation, where the length of the input and output sequences is variable, and context needs to be understood.

Why Is Sora a Key Enabler of the Virtual World

Previously I introduced why ChatGPT will disrupt the open metaverse or virtual world, and the emergence of Sora has further accelerated the formation of the virtual world.

What are the benefits of video? Text and pictures are flat, while video adds spatial depth and presents a three-dimensional world; coupled with the time dimension, it creates a four-dimensional space. This will make the virtual world more real. One of the reasons why previous AI applications, such as chatbots, were not as effective as they should have been is that the AI was unable to determine how a sentence should be followed, which means there was a lack of continuity. The emergence of Sora solves this problem.

We often say that to cultivate a person’s abilities, in addition to reading thousands of books, it is best to travel thousands of miles. Both can now be achieved with artificial intelligence. As the volume and variety of data continue to grow, the appearance of emergent abilities in AI is a matter of time, and Sam Altman is accelerating the process. He is reportedly seeking $7 trillion in investment to build the world’s largest connected large model, which requires connecting 10 million top-tier GPUs and increasing investment in computing power and energy storage.

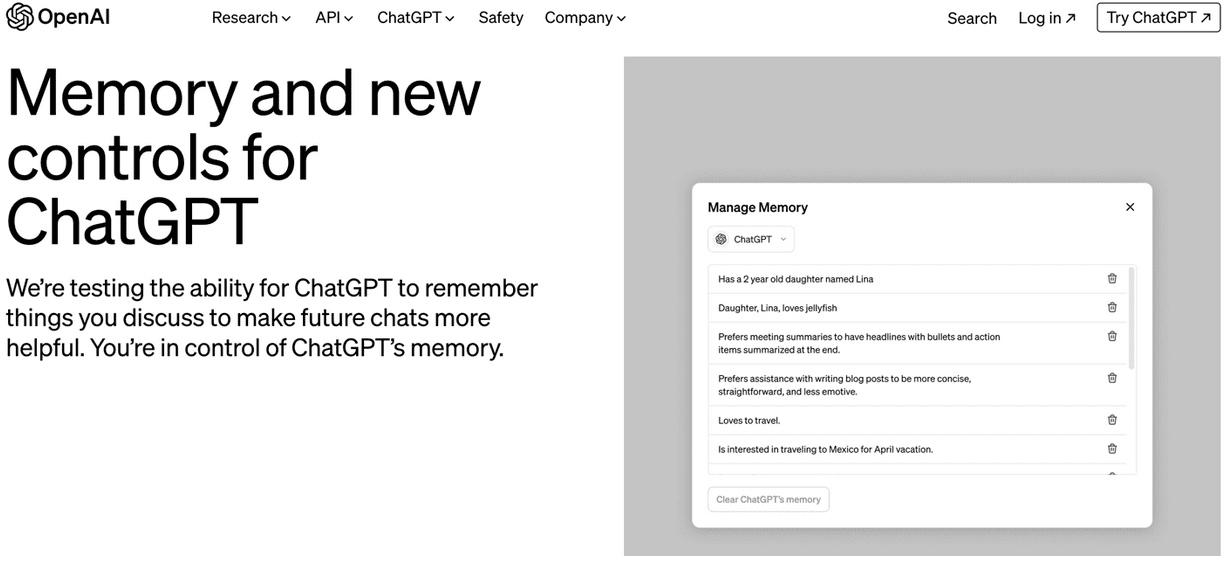

What Does OpenAI’s New Memory Feature Mean

The Memory feature OpenAI is testing can remember what users have discussed with ChatGPT, making future chats more targeted. The website also emphasizes that users themselves control what kind of memory ChatGPT will have. Thinking deeper, what does this mean?

Recall how a conversation works: we listen to what the other person is saying, determine what the key information is and memorize it, and then give the other person feedback based on what we know to complete a round of dialogue. Perhaps in the near future, AI will become the one that keeps the conversation going and keeps us company, tailored to how much each of us understands.

Since ancient times, our ancestors have discussed whether human nature is inherently evil or inherently good.

The world’s major religions all urge people to be kind, because we know that humans are complicated. AI learns how humans survive and behave from all kinds of information generated by humans, whether texts, novels, pictures, or videos. Do you think AI learns more good or bad things about human nature? In the near future, in the AI-controlled virtual world, how much goodness can we anticipate in its principles of conduct?