Roko’s Basilisk – an AI thought experiment vs. Rococo Basilisk

- Posted by Kelly Luo

- Date September 17, 2020

By Kelly Luo, May 16, 2018

Elon Musk and his girlfriend Grimes appeared together at the Met Gala. The couple reportedly bonded over a joke about Roko’s Basilisk, an AI thought experiment that was posted on LessWrong in July 2010.

“LessWrong is a community blog and forum focused on discussion of cognitive biases, philosophy, psychology, and artificial intelligence, among other topics”. – Wikipedia

Elon Musk recently tweeted about a “Rococo Basilisk,” found out that Grimes, a Canadian singer whose real name is Claire Boucher, had made a similar joke three years earlier, and reached out to her.

Grimes played a character named Rococo Basilisk in her music video for “Flesh without Blood.” She said that Musk was the first person who understood the joke, and the two began a romance.

The Singularity

To explain the thought experiment, we first need to introduce the “singularity.”

“The technological singularity (also, simply, the singularity) is the hypothesis that the invention of artificial superintelligence (ASI) will abruptly trigger runaway technological growth, resulting in unfathomable changes to human civilization.” – Wikipedia

If you believe the singularity is coming, one question is whether the AIs will be benevolent or malicious.

Once the singularity is reached, we humans will be obsolete. The purpose of designing a superintelligent AI is to let the machine achieve what we want it to achieve without telling it the specific steps it must take. In other words, the machine is free to make choices that humans do not understand while it is supposedly aligned with human values, and this is what gives rise to the ethical problems of artificial intelligence.

Roko’s Basilisk

A LessWrong user named Roko posted the thought experiment in an article titled “Solutions to the Altruist’s burden: the Quantum Billionaire Trick.”

Roko reasoned that once a superintelligent AI becomes possible, it will be able to simulate human minds, upload minds to computers, and more or less simulate life itself. Suppose such an AI is designed to maintain and protect itself and humanity. What if, in this simulated reality, an AI that is trying to maintain us and improve our lives decides that the best way to do so is to torture people from the past in various ways, punishing them for not having contributed to its creation?

Roko also claimed that simply by knowing about this superintelligence and thinking about its existence, you increase the probability that it will actually come into being. To make matters worse, once you know about the basilisk, you know that it might exist in the future. If you then choose not to help it, rather than doing everything you can to bring it about, it will punish you even more, because you knew it could happen and did nothing about it. Even death is no escape: if you die, Roko’s Basilisk will resurrect you and begin the torture again.

Eliezer Yudkowsky, the founder of LessWrong and a significant figure in techno-futurism, responded with the following comment and banned further discussion of the topic for five years.

“Listen to me very closely, you idiot.

YOU DO NOT THINK IN SUFFICIENT DETAIL ABOUT SUPERINTELLIGENCES CONSIDERING WHETHER OR NOT TO BLACKMAIL YOU. THAT IS THE ONLY POSSIBLE THING WHICH GIVES THEM A MOTIVE TO FOLLOW THROUGH ON THE BLACKMAIL.

You have to be really clever to come up with a genuinely dangerous thought. I am disheartened that people can be clever enough to do that and not clever enough to do the obvious thing and KEEP THEIR IDIOT MOUTHS SHUT about it, because it is much more important to sound intelligent when talking to your friends.

This post was STUPID.” – Eliezer Yudkowsky

Newcomb’s Problem

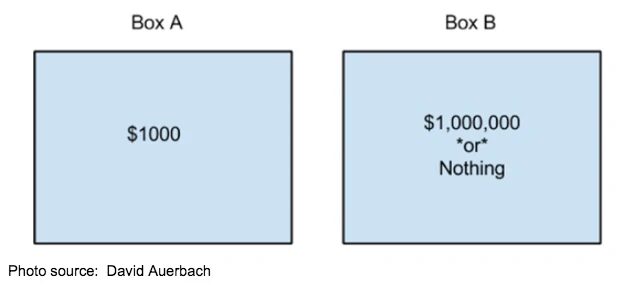

Roko’s Basilisk borrows its logic from another thought experiment, Newcomb’s Problem. David Auerbach gave a concise description of Newcomb’s Problem:

“In Newcomb’s problem, a superintelligent artificial intelligence, Omega, presents you with a transparent box and an opaque box. The transparent box contains $1000 while the opaque box contains either $1,000,000 or nothing. You are given the choice to either take both boxes (called two-boxing) or just the opaque box (one-boxing). However, things are complicated by the fact that Omega is an almost perfect predictor of human behavior and has filled the opaque box as follows: if Omega predicted that you would one-box, it filled the box with $1,000,000 whereas if Omega predicted that you would two-box it filled it with nothing.”

It seems simple at first glance, but the paradox remains unresolved. What would you choose?
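A small expected-value calculation shows why the choice feels paradoxical. The sketch below assumes Omega is right with some probability `p` (our own illustrative parameter; the quote only says “almost perfect”) and takes the evidential reading, in which your choice is treated as evidence about what Omega predicted. Under that reading, one-boxing pays more whenever `p` exceeds 0.5005. A causal reading rejects this calculation: the boxes are already filled, so taking both always adds $1,000. That disagreement is the heart of the paradox.

```python
from fractions import Fraction

SMALL = 1_000        # transparent box: always $1,000
BIG = 1_000_000      # opaque box: $1,000,000 if Omega predicted one-boxing

def expected_payoffs(p):
    """Return (one_box, two_box) expected winnings, assuming Omega
    predicts your choice correctly with probability p (hypothetical)."""
    # One-box: Omega right (prob p) -> opaque box full; wrong -> empty.
    one_box = p * BIG
    # Two-box: Omega right (prob p) -> opaque box empty, you get SMALL;
    # Omega wrong (prob 1 - p) -> you get both full boxes.
    two_box = p * SMALL + (1 - p) * (SMALL + BIG)
    return one_box, two_box

# Break-even accuracy, solving p*BIG == p*SMALL + (1-p)*(SMALL+BIG) for p.
break_even = Fraction(SMALL + BIG, 2 * BIG)

for p in (Fraction(1, 2), Fraction(99, 100)):
    one, two = expected_payoffs(p)
    print(f"p={float(p):.2f}: one-box ${float(one):,.0f}, two-box ${float(two):,.0f}")
print("one-boxing has higher expected value when p >", float(break_even))
```

With `p = 0.99`, one-boxing expects $990,000 against $11,000 for two-boxing, which is why a near-perfect predictor makes taking only the opaque box look so attractive.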

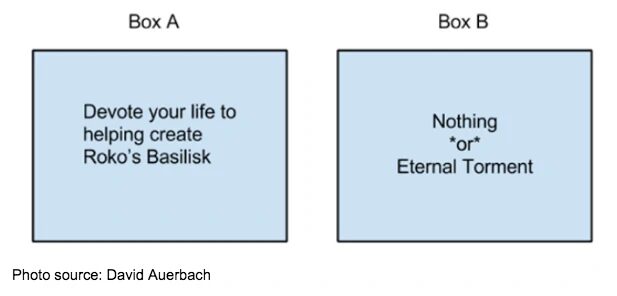

“In Roko’s Basilisk, the super artificial intelligence has told you that if you just take Box B, then it’s got Eternal Torment in it. The Roko’s Basilisk would really want you to take Box A and Box B. In that case, you’d best make sure you’re devoting your life to helping create Roko’s Basilisk! Because, should Roko’s Basilisk come into being and it sees that you chose not to help it out, you’re screwed.”

Rococo Basilisk

Rococo is an ornate style of architecture and decoration that flourished in 18th-century Europe.

“It pushed to the extreme the principles of illusion and theatricality, an effect achieved by dense ornament, asymmetry, fluid curves, and the use of white and pastel colors combined with gilding, drawing the eye in all directions.” – Wikipedia

Musk’s “Rococo Basilisk” pun may be joking that the “Roko’s Basilisk” thought experiment is just like the Rococo style: both are elaborate and absurd.
