AI Hallucination Survival Guide: Case Studies, Causes, and Prevention Strategies

Have You Ever Been “Fooled” by AI?

— The $5,000 Lesson from a Lawyer

Let’s start with a real case: Steven A. Schwartz, a veteran lawyer with more than 30 years of experience, was fined $5,000, jointly with a colleague and their law firm, for submitting fake, AI-generated case citations in court.

Schwartz represented Roberto Mata in a lawsuit against Avianca Airlines. Mata claimed he injured his knee after being struck by a metal food cart during a flight. To support the case, Schwartz used ChatGPT for legal research and, in a 2023 legal brief, cited multiple “court cases” it had produced. However, Avianca’s lawyers and the judge soon discovered that these cases didn’t exist in any legal database.

Schwartz later recalled that he specifically asked ChatGPT whether the cases were real, and the AI confidently assured him they were.

Unfortunately, he was misled by AI hallucinations.

Today, let’s talk about AI hallucinations — why AI sometimes makes things up and how to avoid being misled by it.

What Is AI Hallucination?

AI hallucination occurs when a large language model such as ChatGPT generates content that looks plausible but is fabricated, inaccurate, or misleading.

For example:

You ask AI: “Who invented time travel?”

AI responds: “Dr. John Spacetime invented time travel in 1892 and was awarded the Nobel Prize in Physics for his discovery.”

Sounds fascinating, right? But there’s a problem: it’s completely false! Dr. John Spacetime doesn’t exist, time travel hasn’t been invented, and the Nobel Prize wasn’t even awarded until 1901.

How Does AI Hallucination Happen?

According to a research team led by Professor Shen Yang at Tsinghua University, AI hallucinations mainly stem from five key issues:

1. Data Availability Issues — AI relies on training data that may be incomplete, outdated, or biased.

2. Limited Depth of Understanding — AI struggles with complex questions and often makes assumptions.

3. Inaccurate Context Interpretation — AI may misinterpret the context of a query, leading to misleading responses.

4. Weak External Information Integration — AI cannot access or verify real-time external information and depends solely on existing data.

5. Limited Logical Reasoning & Abstraction — AI often makes logical reasoning and abstract thinking errors, especially for complex tasks.

Types of AI Hallucinations

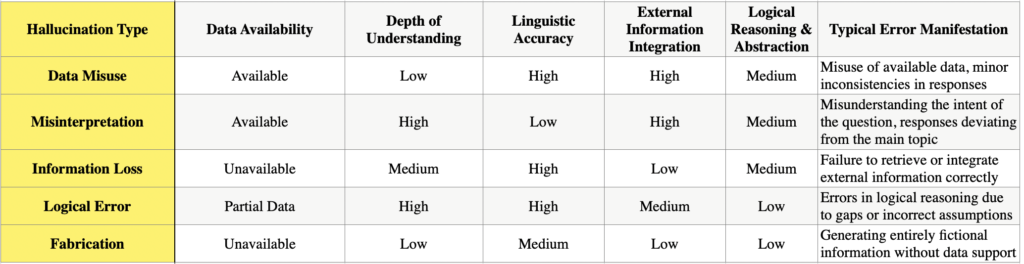

Based on these factors, AI hallucinations can be categorized into five main types:

1. Data Misuse — AI misinterprets or incorrectly applies data, resulting in inaccurate outputs.

2. Context Misunderstanding — AI fails to grasp the background or context of a query, leading to irrelevant or misleading answers.

3. Information Fabrication — AI fills gaps with made-up content when lacking necessary data.

4. Reasoning Errors — AI makes logical mistakes, leading to incorrect conclusions.

5. Pure Fabrication — AI generates entirely fictional information that sounds plausible but has no basis in reality.

Tips to Protect Yourself from AI Hallucinations

AI hallucinations are inevitable, but you can reduce the risk of being misled by improving how you interact with AI. Here are two simple yet effective strategies:

1. Give Clear Instructions — Don’t Make AI “Guess”

— Be specific: Vague prompts can cause AI to “fill in the blanks” with incorrect information. Instead of asking, “Tell me some legal cases,” ask, “List U.S. federal court cases related to aviation accidents from 2020.”

— Set boundaries: Define limits for AI responses, such as “Use Xiaomi’s 2024 Financial Statement.”

— Request sources: Ask AI to provide citations or references so you can verify the information.
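The three tips above can be combined into a reusable prompt template. The sketch below is a hypothetical helper (`build_prompt` is not part of any library); it only assembles text, and the exact wording is an assumption you should adapt to whichever AI tool you use.

```python
def build_prompt(question: str, scope: str, require_sources: bool = True) -> str:
    """Assemble a prompt that is specific, bounded, and asks for sources.

    Hypothetical helper for illustration; the wording is only a suggestion.
    """
    lines = [
        # Be specific: state exactly what you want.
        question.strip(),
        # Set boundaries: restrict the material the model may rely on,
        # and give it an explicit alternative to guessing.
        f"Use only the following material: {scope.strip()}.",
        "If the answer is not in that material, reply \"I don't know\".",
    ]
    if require_sources:
        # Request sources: every claim should be traceable so you can verify it.
        lines.append("Cite a source (document, section, or page) for every factual claim.")
    return "\n".join(lines)


print(build_prompt(
    "What was Xiaomi's total revenue for 2024?",
    "Xiaomi's 2024 annual report",
))
```

The explicit "I don't know" option is intended to give the model a way out other than filling the gap with invented content; it reduces the risk but does not remove the need to verify the answer.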

2. Verify AI’s Output — Don’t Trust It Blindly

— Check sources: If AI provides references, make sure they exist and are credible. Verify citations from websites or academic papers.

— Stay skeptical: Treat AI-generated content as a reference, not absolute truth. Use your own expertise and common sense to assess accuracy.

— Cross-check with other tools: Use multiple AI platforms to answer the same question and compare the results.
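For citation-heavy work like the Schwartz case, the checks above can be partly scripted. The sketch below assumes you already have (1) the citations returned by each AI platform and (2) a set of citations you have personally confirmed in a trusted database; the function name, the normalization rule, and the sample case names are all hypothetical. Only the trusted lookup counts as verification: agreement between platforms is merely a signal, since two models can repeat the same fabrication.

```python
from dataclasses import dataclass, field


def normalize(citation: str) -> str:
    """Lower-case and collapse whitespace so trivial formatting differences match."""
    return " ".join(citation.lower().split())


@dataclass
class CitationReport:
    verified: list = field(default_factory=list)    # found in the trusted index
    unverified: list = field(default_factory=list)  # not found: treat as possibly fabricated
    disputed: list = field(default_factory=list)    # cited by some platforms but not all


def check_citations(answers: dict, trusted_index: set) -> CitationReport:
    """answers maps a platform name to the list of citations it returned."""
    trusted = {normalize(c) for c in trusted_index}
    per_model = [{normalize(c) for c in cites} for cites in answers.values()]
    all_citations = sorted(set().union(*per_model))

    report = CitationReport()
    for citation in all_citations:
        # Check sources: only the trusted index counts as verification.
        if citation in trusted:
            report.verified.append(citation)
        else:
            report.unverified.append(citation)
        # Cross-check: flag citations that not every platform agrees on.
        if sum(citation in cites for cites in per_model) < len(per_model):
            report.disputed.append(citation)
    return report


# Hypothetical case names, for illustration only.
answers = {
    "platform_a": ["Smith v. Jones (2015)", "Doe v. Roe (2018)"],
    "platform_b": ["Smith v.  Jones (2015)"],
}
trusted_index = {"Smith v. Jones (2015)"}
report = check_citations(answers, trusted_index)
print(report.verified)    # ['smith v. jones (2015)']
print(report.unverified)  # ['doe v. roe (2018)']
print(report.disputed)    # ['doe v. roe (2018)']
```

Anything in `unverified` should be looked up by hand before it goes anywhere near a court filing or a published document.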

Remember, no matter how smart AI seems, it’s just a tool — the real judgment lies with you. Instead of getting tricked by AI, learn how to outsmart it!

Key Considerations for Choosing an AI Hallucination Detection Tool

With the rise of AI-generated content, many companies now offer solutions to help businesses detect and mitigate AI hallucinations. While I do not endorse specific providers, here are some key factors to consider when making a selection.

1. Core Evaluation Criteria

The most important aspect is assessing how the tool conducts fact-checking. Look for:

— The evaluation metrics it uses to measure AI accuracy.

— Whether it provides detailed explanation reports that clearly identify hallucinations, explain their causes, and cite reliable sources.
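One common metric of this kind is a claim-level hallucination rate: split an answer into individual factual claims, check each against reference sources, and report the share that is unsupported. The minimal sketch below assumes the claims have already been labeled (by a human reviewer or a checking tool, which is outside its scope), then computes that rate and produces a simple explanation report. The class and function names are hypothetical, and real tools may define their metrics differently.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ClaimCheck:
    claim: str
    supported: bool
    source: Optional[str] = None  # reference that supports the claim, if any


def hallucination_rate(checks: List[ClaimCheck]) -> float:
    """Share of claims with no supporting source (0.0 means every claim is supported)."""
    if not checks:
        return 0.0
    return sum(not c.supported for c in checks) / len(checks)


def explanation_report(checks: List[ClaimCheck]) -> str:
    """One line per claim, stating whether it is supported and by what."""
    lines = []
    for c in checks:
        if c.supported:
            lines.append(f"[OK] {c.claim} (source: {c.source})")
        else:
            lines.append(f"[HALLUCINATION?] {c.claim} (no supporting source found)")
    return "\n".join(lines)


# Claims taken from the "time travel" answer earlier in this article.
checks = [
    ClaimCheck("Dr. John Spacetime invented time travel in 1892", supported=False),
    ClaimCheck("Dr. John Spacetime won the Nobel Prize in Physics", supported=False),
    ClaimCheck("The Nobel Prize in Physics has been awarded since 1901",
               supported=True, source="nobelprize.org"),
]
print(f"Hallucination rate: {hallucination_rate(checks):.0%}")  # Hallucination rate: 67%
print(explanation_report(checks))
```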

2. Advanced Features to Match Your Needs

Depending on your company’s specific use case, consider whether the tool offers:

— Real-Time Verification Pipelines — Detects and corrects hallucinations as AI generates content.

— Multimodal Fact-Checking — Simultaneously verifies text, images, and audio for accuracy.

— Self-Healing AI Models — Automatically corrects inaccurate outputs without human intervention.

— Enterprise-Specific Knowledge Integration — Custom AI fact-checking models tailored to private datasets.

3. Unique Differentiators

Some providers offer specialized features that may align with your company’s budget and requirements, such as:

— Synthetic Data Generation for Hallucination Training — Creates controlled datasets to enhance AI verification models.

— Crowdsourced Human Review — Combines AI detection with expert reviewers for hybrid verification.

— Legal & Compliance Fact-Checking — Monitors AI-generated content for regulatory and contractual compliance.

— Proprietary Transformer-Based Verification — Uses a unique AI architecture optimized for detecting hallucinations.

Choosing an AI hallucination detection tool is fundamentally about balancing the Accuracy–Cost–Scalability triangle. Start from your current business pain points: pinpoint which processes are affected by hallucinations, weigh costs against benefits, and make sure the tool can accommodate future technology upgrades and expansion.
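One simple way to make the Accuracy–Cost–Scalability trade-off explicit is a weighted scoring matrix. The sketch below assumes you rate each candidate tool from 0 to 10 on each axis (for cost, a higher rating means cheaper) and choose weights that reflect your priorities; the tool names, ratings, and weights are purely hypothetical.

```python
# Hypothetical weights: how much each side of the triangle matters to you.
WEIGHTS = {"accuracy": 0.5, "cost": 0.3, "scalability": 0.2}


def weighted_score(ratings: dict, weights: dict = WEIGHTS) -> float:
    """Weighted average of 0-10 ratings; for cost, a higher rating means cheaper."""
    total_weight = sum(weights.values())
    return sum(weights[axis] * ratings[axis] for axis in weights) / total_weight


def rank_tools(candidates: dict, weights: dict = WEIGHTS) -> list:
    """Return (tool, score) pairs, best first."""
    scored = [(name, weighted_score(r, weights)) for name, r in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


candidates = {
    "Tool A": {"accuracy": 9, "cost": 4, "scalability": 6},
    "Tool B": {"accuracy": 7, "cost": 8, "scalability": 7},
}
for name, score in rank_tools(candidates):
    print(f"{name}: {score:.1f}")
# Tool B: 7.3
# Tool A: 6.9
```

Shifting weight toward accuracy, as a legal or compliance team might, can flip the ranking: with weights of 0.7, 0.2, and 0.1, Tool A scores about 7.7 and Tool B about 7.2. That sensitivity is exactly the trade-off the triangle describes.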
